Kubernetes Autoscaling Explained: From Metrics to Real-World Use Cases

Kapstan

08 May, 2025

7 mins read

Managing cloud infrastructure at scale demands intelligent resource optimization. As Kubernetes has become the de facto standard for container orchestration, it’s essential to understand how Kubernetes Autoscaling can dramatically improve efficiency, reliability, and cost control in your deployments. In this guide, we'll walk through the core concepts behind autoscaling in Kubernetes, break down the types of autoscalers, and explore how companies like Kapstan use these tools in real-world scenarios.

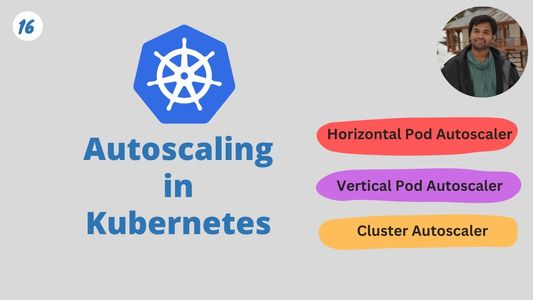

What is Kubernetes Autoscaling?

Kubernetes Autoscaling refers to the automated adjustment of resources, such as pods and nodes, in response to changing workloads. Instead of relying on manual provisioning, Kubernetes Autoscaling lets your applications respond to real-time metrics like CPU usage, memory consumption, and custom signals. This leads to better resource utilization, lower operational overhead, and improved application performance.

There are three primary components of Kubernetes Autoscaling:

- Horizontal Pod Autoscaler (HPA)

- Vertical Pod Autoscaler (VPA)

- Cluster Autoscaler (CA)

Each component plays a specific role in scaling applications effectively.

1. Horizontal Pod Autoscaler (HPA)

HPA automatically increases or decreases the number of pod replicas based on CPU or other selected metrics. For example, if an application sees a spike in CPU usage, HPA can scale from 2 pods to 10 to meet demand, then scale back down during quieter periods.

Key Features:

- Reactive to real-time load

- Configurable thresholds (e.g., target CPU utilization)

- Integrates with the Kubernetes Metrics Server for CPU and memory metrics (custom metrics require a metrics adapter)

Best for: Stateless applications and APIs with fluctuating traffic.
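The Kubernetes documentation describes the ratio the HPA controller uses to size a workload: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below reproduces that rule, including the default 10% tolerance band and the minReplicas/maxReplicas bounds. The function name and the example replica bounds are hypothetical. The real controller also has to handle missing metrics and pods that are not yet ready.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Sketch of the HPA scaling rule (hypothetical helper, not a Kubernetes API).

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped
    to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count unchanged.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * current_metric / target_metric)
    # Respect the minReplicas / maxReplicas bounds configured on the HPA.
    return max(min_replicas, min(max_replicas, desired))


# 2 pods averaging 90% CPU against a 50% target -> ceil(3.6) = 4 replicas
print(desired_replicas(2, 90, 50))
```

A reading of 52% against the same 50% target falls inside the tolerance band, so the replica count stays put. This dead zone keeps small fluctuations from triggering scaling.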

2. Vertical Pod Autoscaler (VPA)

VPA adjusts the CPU and memory requests/limits of individual pods. Unlike HPA, which changes the number of pods, VPA changes the size of each pod.

Key Features:

- Analyzes historical and live metrics

- Recommends or automatically applies resource requests

- May evict and recreate pods to apply new values

Best for: Long-running batch jobs or backend services where right-sizing improves efficiency.
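The VPA recommender bases its suggestions on a history of observed usage. By default it targets roughly a high percentile of that usage, around the 90th, and adds a safety margin on top. The sketch below is a deliberately simplified version of the idea: it takes a nearest-rank percentile over raw samples and adds an assumed 15% margin. The real recommender uses decaying histograms, handles memory peaks differently, and also reports lower and upper bounds. The helper names and default values here are illustrative assumptions.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile of `samples`, with p in (0, 1]."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered)))  # 1-based rank
    return ordered[rank - 1]


def recommend_request(samples, target_percentile=0.9, margin=0.15):
    """Simplified VPA-style request: usage percentile plus a safety margin.

    Hypothetical helper; the percentile and margin defaults are assumptions.
    """
    if not samples:
        raise ValueError("need at least one usage sample")
    return percentile(samples, target_percentile) * (1 + margin)


# CPU usage samples in millicores, including one short 900m spike.
cpu_samples = [120, 150, 180, 200, 220, 250, 260, 300, 310, 900]
# Roughly 356 millicores: the lone spike does not drive the request.
print(recommend_request(cpu_samples))
```

Sizing from a percentile rather than the maximum is what lets right-sizing save money. A single outlier does not inflate the request for every replica.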

3. Cluster Autoscaler (CA)

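Cluster Autoscaler dynamically adjusts the number of nodes in your cluster. It adds nodes when pods remain pending because no existing node has enough free resources for their requests. It also removes nodes that stay underutilized, provided their pods can be rescheduled elsewhere.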

Key Features:

- Scales nodes up or down automatically

- Works with major cloud providers like AWS, GCP, and Azure

- Removes underutilized nodes to cut idle capacity

Best for: Managing cost and performance in large-scale, multi-tenant Kubernetes clusters.
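Cluster Autoscaler decides based on resource requests, not live usage. It simulates whether pending pods would fit on a new node from one of your node groups. The sketch below approximates that check with first-fit decreasing bin packing on CPU and memory requests for a single node shape. The PodRequest type and the node sizes are hypothetical. The real autoscaler runs a full scheduler simulation, covering affinity, taints, and similar constraints, and uses a configurable expander to choose among node groups.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PodRequest:
    name: str
    cpu_m: int   # CPU request in millicores
    mem_mi: int  # memory request in MiB


def nodes_needed(pending, node_cpu_m, node_mem_mi):
    """Estimate how many new nodes of one shape would fit all pending pods,
    using first-fit decreasing bin packing (a simplification)."""
    for pod in pending:
        if pod.cpu_m > node_cpu_m or pod.mem_mi > node_mem_mi:
            raise ValueError(f"{pod.name} does not fit on an empty node of this shape")
    free = []  # remaining [cpu_m, mem_mi] on each simulated new node
    # Place the largest pods first; this usually packs more tightly.
    for pod in sorted(pending, key=lambda p: (p.cpu_m, p.mem_mi), reverse=True):
        for node in free:
            if node[0] >= pod.cpu_m and node[1] >= pod.mem_mi:
                node[0] -= pod.cpu_m
                node[1] -= pod.mem_mi
                break
        else:
            # No simulated node has room: open another one.
            free.append([node_cpu_m - pod.cpu_m, node_mem_mi - pod.mem_mi])
    return len(free)


# Five pending pods requesting 1500m CPU / 2048Mi each, on nodes with
# 3800m CPU / 14000Mi allocatable: two pods fit per node.
pending = [PodRequest(f"api-{i}", 1500, 2048) for i in range(5)]
print(nodes_needed(pending, node_cpu_m=3800, node_mem_mi=14000))  # 3
```

The estimate is driven entirely by requests. This is why accurate requests, such as those VPA can recommend, directly affect how many nodes you pay for.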

Real-World Use Case: How Kapstan Optimizes with Kubernetes Autoscaling

At Kapstan, we specialize in helping startups and enterprise teams streamline their cloud-native operations. One of our SaaS clients faced highly variable, unpredictable user demand. Their infrastructure was overprovisioned to handle peak traffic, leading to unnecessary costs during off-peak hours.

Solution with Kubernetes Autoscaling:

- We implemented HPA on their frontend API service based on CPU and request rate.

- For database processing workers, we used VPA to fine-tune memory and CPU settings.

- The Cluster Autoscaler was deployed to scale the underlying EC2 instances based on workload.

Results:

- 38% reduction in monthly cloud infrastructure costs

- Improved app response time by 22% during peak loads

- Zero downtime during scaling events

Kubernetes Autoscaling provided the flexibility and control they needed to match infrastructure to demand—without manual intervention.

Key Metrics that Drive Autoscaling

To set up autoscaling effectively, you must monitor and understand relevant metrics:

- CPU Utilization: Most common trigger for HPA.

- Memory Usage: Especially important for VPA decisions.

- Custom Metrics: Application-specific signals such as request latency or queue length.

- Pod Scheduling Failures: Pods stuck in Pending for lack of node capacity are the signal Cluster Autoscaler acts on.

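The Kubernetes Metrics Server supplies the CPU and memory metrics that HPA and VPA consume. Tools like Prometheus and Datadog collect, store, and visualize a broader set of metrics and, through a metrics adapter, can feed custom metrics to HPA.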
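Custom metrics plug into the same HPA calculation. With an average-value target, such as queue length per pod, the HPA sizes the workload so that each pod handles roughly the target amount. When several metrics are configured, it computes a replica count for each one and uses the largest. The sketch below illustrates that combination. The helper names, the queue metric, and the replica bounds are assumptions for illustration, and tolerance handling is omitted for brevity.

```python
import math


def replicas_for_utilization(current_replicas, avg_utilization, target_utilization):
    """Resource metric such as CPU %: scale in proportion to the usage ratio."""
    return math.ceil(current_replicas * avg_utilization / target_utilization)


def replicas_for_average_value(total_value, target_per_pod):
    """Average-value target such as queue length: enough pods that each
    handles at most `target_per_pod` items."""
    return math.ceil(total_value / target_per_pod)


def combine(candidates, min_replicas=1, max_replicas=20):
    """With several metrics, take the largest per-metric proposal,
    then apply the min/max replica bounds (hypothetical defaults)."""
    return max(min_replicas, min(max_replicas, max(candidates)))


# 4 workers at 40% CPU (target 60%) would shrink to 3 on CPU alone,
# but a backlog of 900 messages at 100 per pod calls for 9.
cpu_based = replicas_for_utilization(4, 40, 60)
queue_based = replicas_for_average_value(900, 100)
print(combine([cpu_based, queue_based]))  # 9
```

This shows why queue-driven workers often need a custom metric. CPU alone can look idle while a backlog is building.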

Best Practices for Kubernetes Autoscaling

- Set Realistic Thresholds: Avoid aggressive scaling policies that may cause instability.

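- Use Cooldown Periods: Configure stabilization windows (for HPA, the stabilizationWindowSeconds setting under behavior) to prevent frequent scaling events that can lead to thrashing.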

- Monitor Continuously: Use observability tools to track scaling behavior and refine settings.

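- Combine HPA + VPA Carefully: They conflict when both act on the same CPU or memory signal for the same workload. Use them on different workloads, or drive HPA from custom metrics while VPA handles resource sizing.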

- Test Before Production: Use load tests to simulate traffic and observe autoscaler behavior.
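In the HPA's autoscaling/v2 API, cooldowns take the form of stabilization windows. For scale-down, the default window is 300 seconds: the controller looks back over the window and acts on the highest replica recommendation it saw there, so a brief dip in load does not shrink the deployment. The class below is a minimal sketch of that behavior, and its class and method names are hypothetical. Scale-up is left immediate, matching the default scale-up window of zero.

```python
from collections import deque


class ScaleDownStabilizer:
    """Holds recent replica recommendations and returns the highest one
    within the window (hypothetical helper mirroring HPA scale-down
    stabilization)."""

    def __init__(self, window_seconds: float = 300.0):
        self.window = window_seconds
        self.history = deque()  # (timestamp, recommendation), oldest first

    def stabilize(self, now: float, recommendation: int) -> int:
        self.history.append((now, recommendation))
        # Forget recommendations that are older than the window.
        while self.history and self.history[0][0] < now - self.window:
            self.history.popleft()
        # The highest recent value wins: drops are delayed until older, higher
        # recommendations age out, while a new higher value applies at once.
        return max(r for _, r in self.history)


stabilizer = ScaleDownStabilizer(window_seconds=300)
print(stabilizer.stabilize(0, 10))   # 10
print(stabilizer.stabilize(60, 4))   # 10: the dip is held back
print(stabilizer.stabilize(301, 3))  # 4: the t=0 recommendation has aged out
```

Longer windows trade slower cost savings for fewer disruptive scale-downs. Load tests are a good place to tune that balance.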

Final Thoughts

Kubernetes Autoscaling is not just a "nice to have"—it's a fundamental capability for any business looking to scale applications efficiently in the cloud. When properly implemented, it leads to better user experiences, optimized costs, and more resilient systems.

At Kapstan, we help organizations unlock the full power of Kubernetes through tailored infrastructure solutions, including autoscaling strategies. Whether you're new to Kubernetes or optimizing a mature cluster, our team can guide you toward scalable, intelligent cloud operations.

Written By:

Kapstan